Hi. My name is Charlie Deck. I am a digital designer, engineer, and researcher.

I've created tools, games, apps, and creative toys ranging from iPad synthesizers to VR games to AI coding tools.

My mission at work is to push the frontier of how technology can help us fulfill and exceed our potential -- i.e., land the benefit of AI and future software for the good of us all.

I'm in London. Reach out if you want to chat.

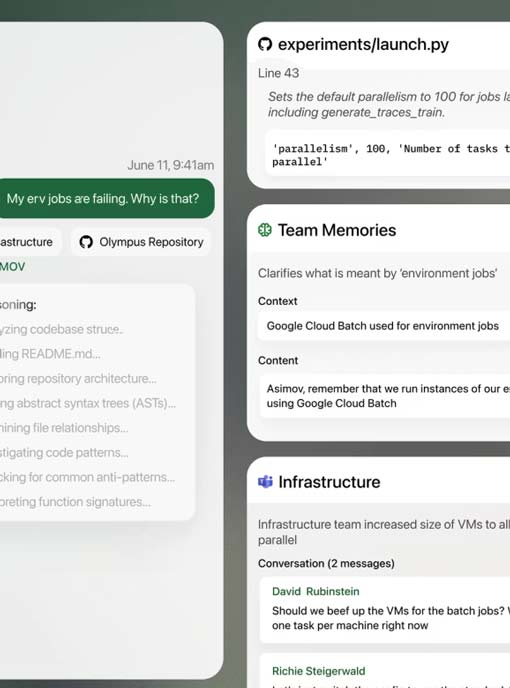

Work. Since Q4 2024, I've been working on product applications of AI at Reflection, where we're building superintelligence for enterprises. My work focuses on translating cutting-edge AI research into practical tools that augment engineering teams' capabilities.

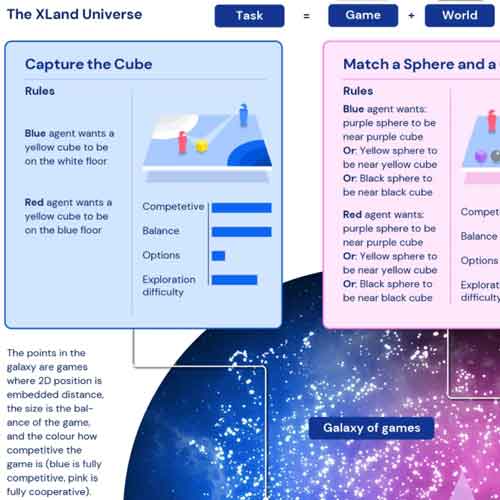

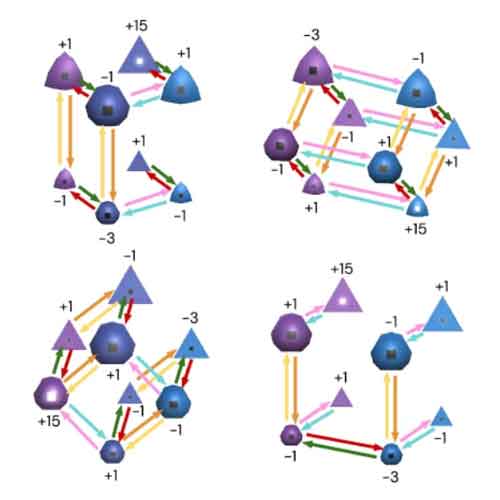

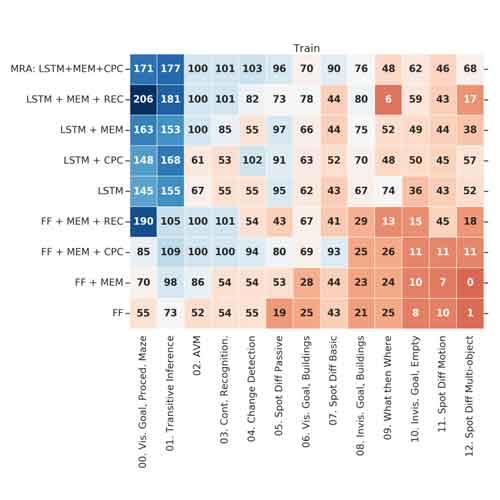

Previously, I was on Google DeepMind's AI experience team, where I built tools, prototyped, and surveyed research for transformative AI. There, I led demos across engineering and research teams working on generative AI, aligning research and tech strategy to accelerate DeepMind's research agenda. Then as now, I actively support an inclusive and collaborative working environment conducive to ambitious, mission-driven work.

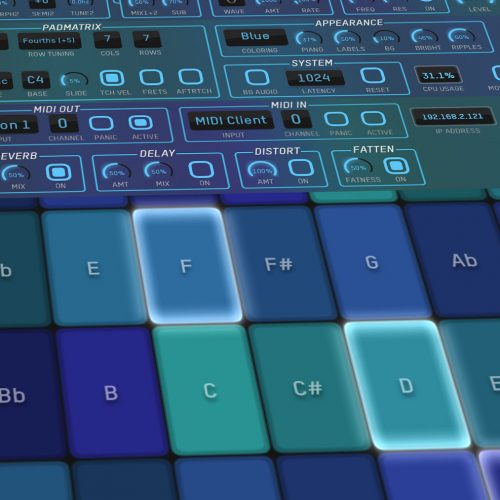

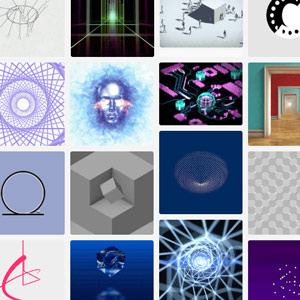

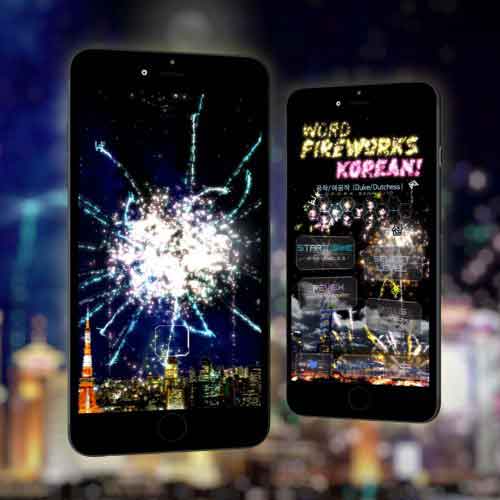

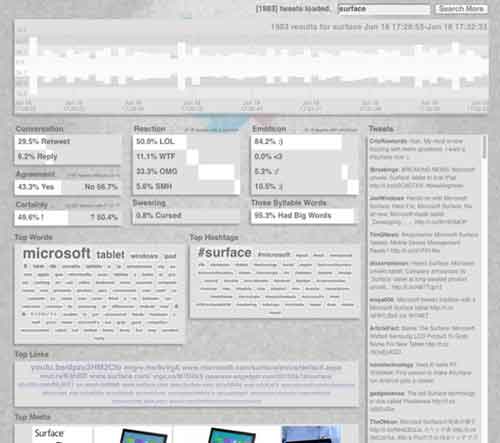

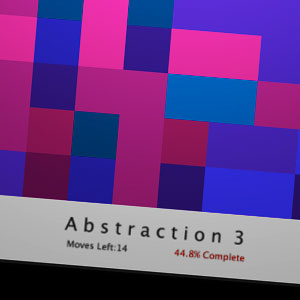

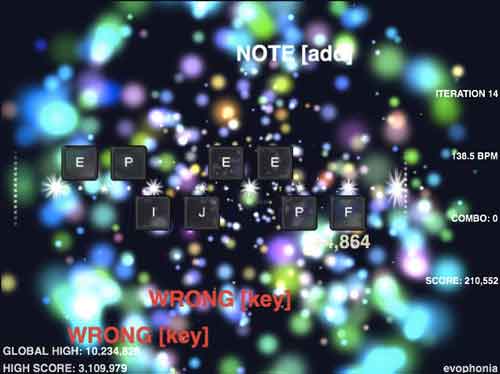

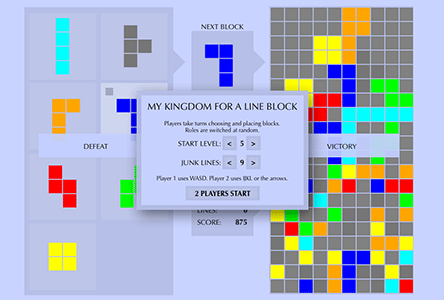

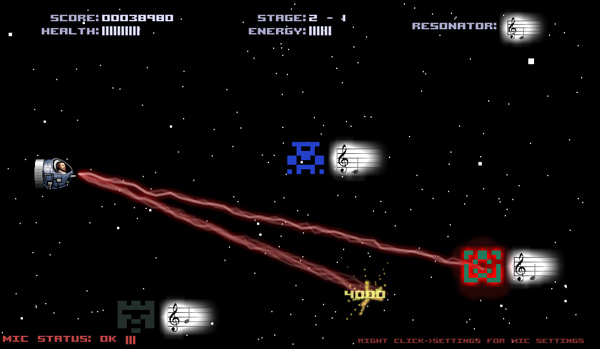

Past work. Before DeepMind, I independently created a variety of products across mobile, web, and desktop platforms, reaching millions of users.

I chased interesting problems, tackling novel UX and design challenges. I created every aspect of the resulting products.

The result was a diverse corpus consisting of creative apps, off-beat games, and interactive art pieces. Some are commercial releases; others are experiments.