infinite storyteller

“Infinite Storyteller” is a generative choose-your-own-adventure, told with state-of-the-art speech synthesis and generative imagery, and presented as a Twitch broadcast from a real-time 3D game engine.

In March 2023, I took some time to play with procedural narrative and generative AI. I wanted a procedural narrative that changed dramatically with user input, something along the lines of a choose-your-own-adventure book. I wanted to see how much story quality I could (affordably) wring out of the large language models available to me. I also wanted to make it shareable: generally SFW and accessible to the widest possible audience.

I’d been messing around generating creative fiction with various generative language models for a while, so I was feeling bullish that I could prompt-engineer a mechanism to generate plausibly interesting story chunks and fork-in-the-road choices. I knew I’d have to be smart about it, as this would cost some money: essentially paying the API by the word (or rather, by the token).

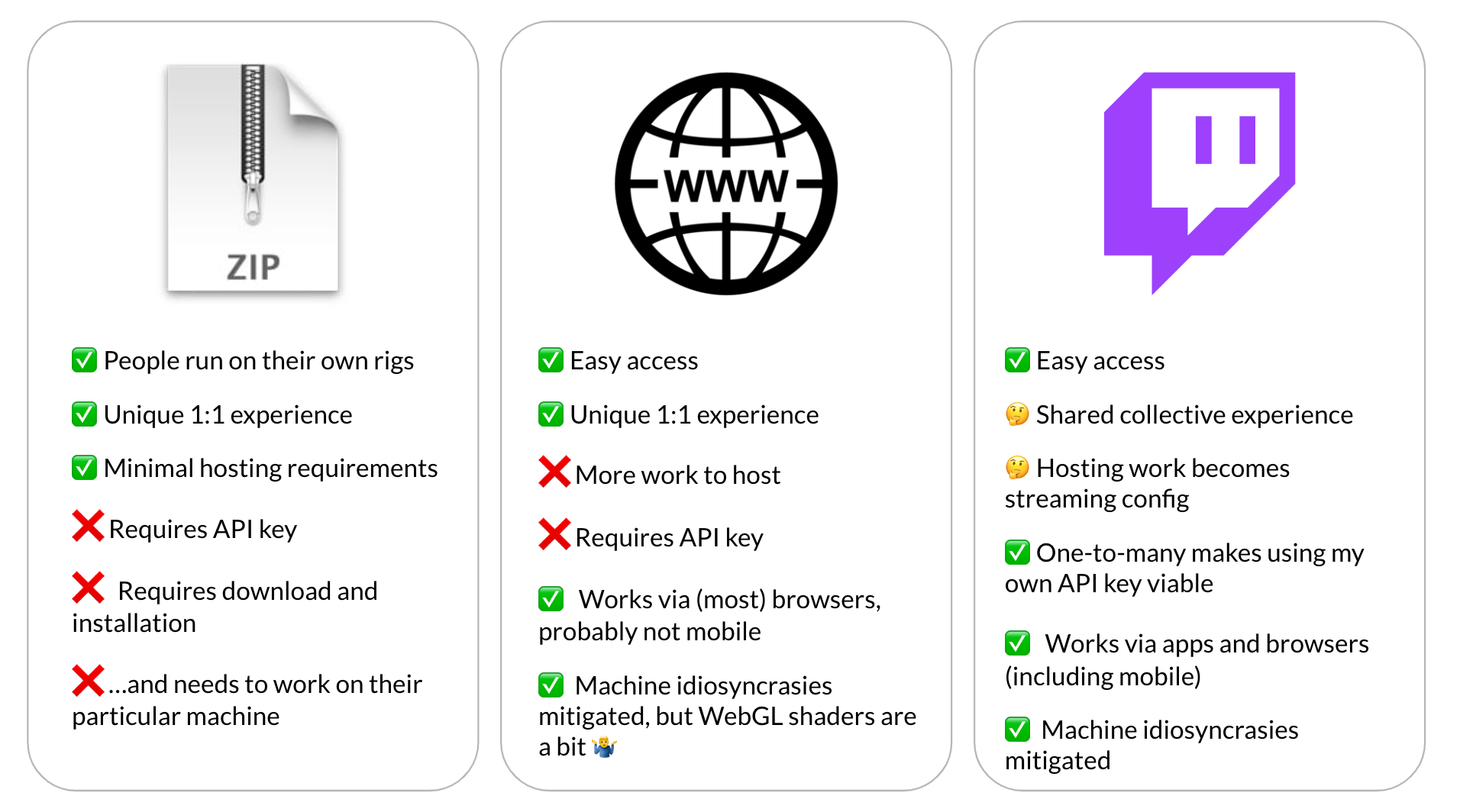

First, I needed to figure out what sort of artifact I was going to build. Shipping a binary, the standard game-jam operating procedure, would add a ton of friction for users trying to access the experience. Hosting a web-based game is better but would still require user-supplied API keys for the generative AI bits. I decided to sidestep these issues by broadcasting the stories on Twitch and contriving a way to interact with the chat automatically.

Having settled on a Twitch-broadcast choose-your-own-adventure experience, I still faced the question of how rich and interesting I could make it in the few days off I’d allotted for the project. The result was a real kitchen sink of generative AI tech.

Visuals

Partly inspired by the many story read-aloud videos my kids watch on YouTube, the experience consists of a simulated, literal book being read by an off-screen, benign narrator.

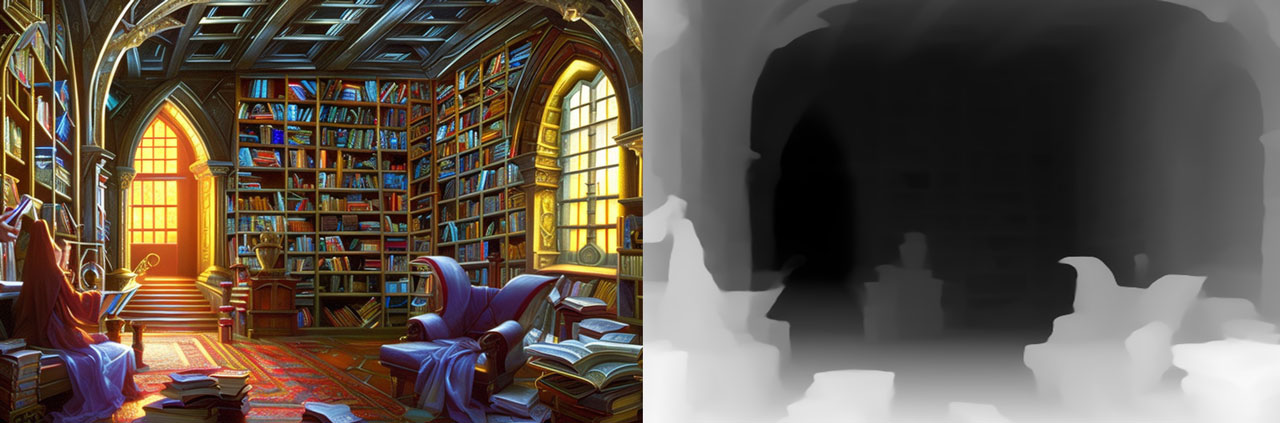

To make the background more visually interesting, I generated images of fantastical libraries with Stable Diffusion and gave them a cool zooming effect.

This effect is achieved by using a recent MiDaS model to estimate depth and using that estimate to distort the textured image’s geometry. As you dolly the camera forward and backward, it looks as if you’re moving through the scene. A keen eye will spot moments where the perspective shift reveals that the 2D image doesn’t actually contain enough information to fill a 3D scene. It’s not perfect, but I think it’s pretty cool: a thematically consistent bit of eye candy.
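As a rough illustration of the depth-displacement idea, here is a minimal NumPy sketch that turns a per-pixel depth estimate into a displaced grid mesh. It assumes the input is relative inverse depth (disparity), where larger means nearer, which is what MiDaS predicts. The function name and the `strength` parameter are hypothetical, and in the project itself this happened in Unity rather than Python.

```python
import numpy as np


def depth_displaced_grid(disparity: np.ndarray, strength: float = 1.0):
    """Turn an (H, W) disparity map into a displaced grid mesh (hypothetical helper).

    Larger disparity means nearer, as with MiDaS's relative inverse-depth output.
    Returns (vertices, uvs, triangles).
    """
    h, w = disparity.shape
    span = float(disparity.max() - disparity.min())
    d = (disparity - disparity.min()) / max(span, 1e-8)  # normalize to [0, 1]
    ys, xs = np.mgrid[0:h, 0:w]
    u = xs / (w - 1)
    v = ys / (h - 1)
    # x and y span the image plane; far pixels (low disparity) are pushed back along -z
    vertices = np.stack([u - 0.5, 0.5 - v, -strength * (1.0 - d)], axis=-1).reshape(-1, 3)
    # texture coordinates map each vertex back to its source pixel (v flipped)
    uvs = np.stack([u, 1.0 - v], axis=-1).reshape(-1, 2)
    # two triangles per grid cell; cells straddling depth edges get stretched,
    # which is where the "not enough information" artifacts show up
    idx = np.arange(h * w).reshape(h, w)
    a, b = idx[:-1, :-1].ravel(), idx[:-1, 1:].ravel()
    c, e = idx[1:, :-1].ravel(), idx[1:, 1:].ravel()
    triangles = np.concatenate([np.stack([a, c, b], axis=1), np.stack([b, c, e], axis=1)])
    return vertices, uvs, triangles
```

Texturing this mesh with the original image and moving the camera along z produces the parallax; the stretched triangles at depth discontinuities are the giveaway a keen eye will catch.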

The book came from the Unity asset store: a simple vertex-weighted model with a single loose “page”. A shader takes care of the animation.

The book covers were also generated with Stable Diffusion (I used prompts that referred to “antique book covers”), plus a bit of Photoshop work to make them map cleanly onto the model.

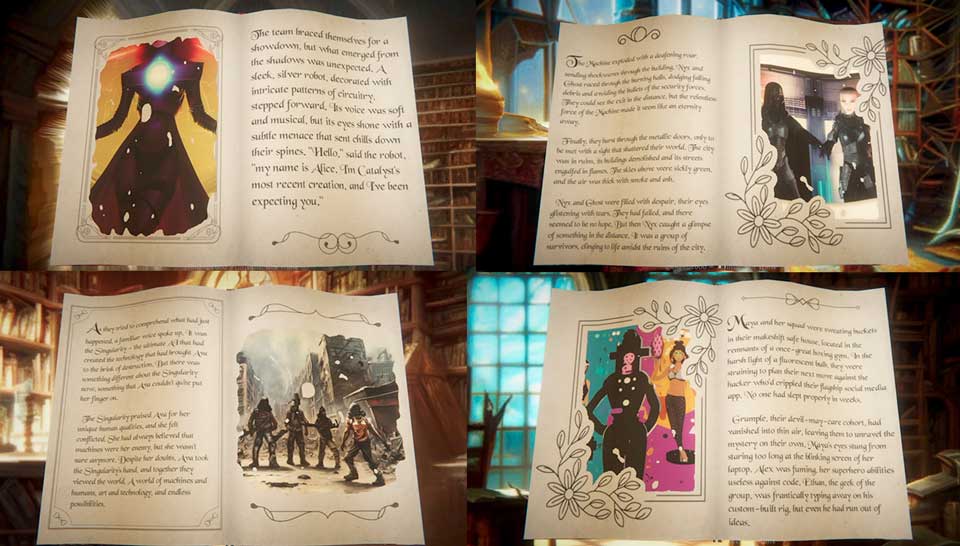

There’s a lot to talk about in the page contents. I decided each set of facing pages would have an illustration page and a prose page. I created around a dozen templates for each type of page, and these are picked at random as the story progresses.

In the prose pages, text is rendered as SDF quads via Unity’s TextMeshPro component. TMP auto-sizes the text to fit the region it’s given, but to keep things from getting too small, I preprocess the story into sensible-length page chunks. For a bit more olde-storybook flair, chapters start with a large capital letter.
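The page-chunking step might look something like the sketch below. It greedily packs whole sentences onto a page until a word budget is reached. The 120-word default and the regex-based sentence splitting are assumptions for illustration, not the project's actual values.

```python
import re
from typing import List


def paginate(text: str, max_words: int = 120) -> List[str]:
    """Split prose into page-sized chunks without breaking sentences (illustrative)."""
    # naive sentence split: break after ., ! or ? followed by whitespace
    sentences = re.split(r"(?<=[.!?])\s+", text.strip())
    pages: List[str] = []
    current: List[str] = []
    count = 0
    for sentence in sentences:
        n = len(sentence.split())
        # start a new page if this sentence would overflow the current one
        if current and count + n > max_words:
            pages.append(" ".join(current))
            current, count = [], 0
        current.append(sentence)
        count += n
    if current:
        pages.append(" ".join(current))
    return pages
```

A single sentence longer than the budget still gets its own page; in that case TMP's auto-sizing would have to absorb the overflow.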

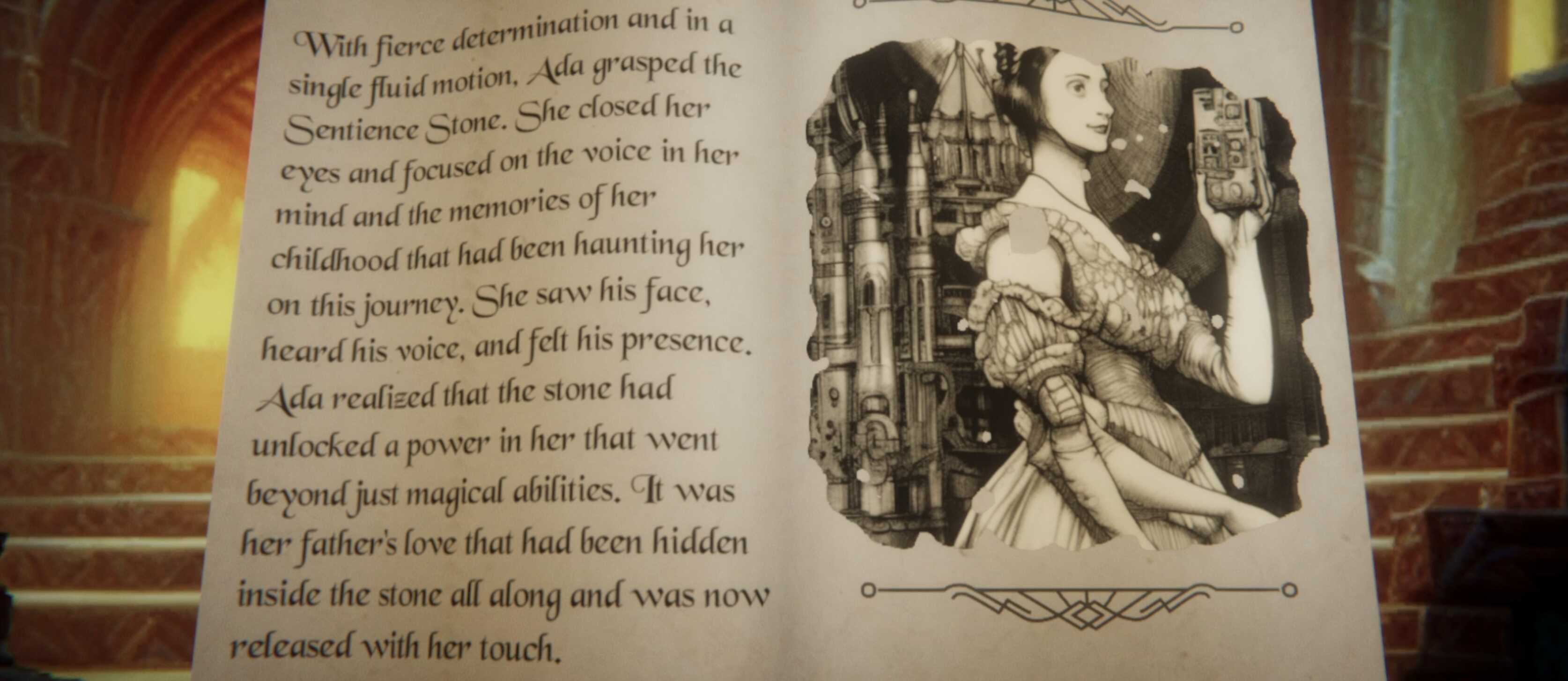

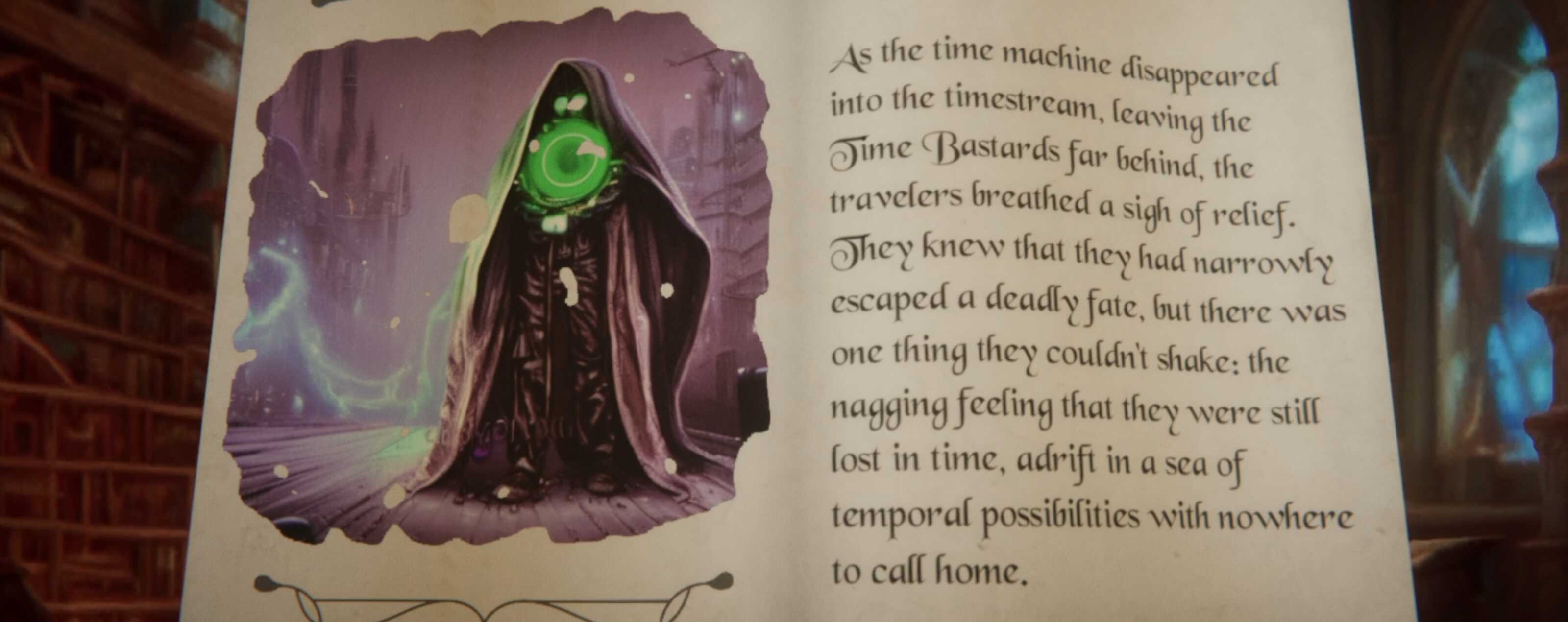

Image pages feature an image generated by txt2img models; I integrated both DALL-E 2 and Stable Diffusion. (The captioning process is described below.) Some templates matte the image onto the page via a mask, creating a stylised “illuminated” effect.

Both prose and image pages feature a variety of ornaments, which were licensed from Envato. (Creating a procedural system for generating those would be a fun project, though…)

Generative text

The bulk of the interesting work in this project was in and around the prompt engineering. I built a small prompt-chaining module that encapsulated prompt templates, output format specifications, parsing, and retry strategies. Then came the alchemy of iterating on the prompts themselves: prompts to generate story ideas, introductions, story synopses, chapters, captions, and story choices. For each of those prompts, there are personas, the number and character of few-shot examples to include, what additional context to include for story consistency, and finally what specific instructions to give the model. All of these are free parameters to iterate upon.
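To make the idea of a prompt-chaining module concrete, here is a minimal sketch of one "step" that bundles a template, an output-format specification, a parser, and a retry strategy. The `complete` callable is a hypothetical stand-in for whatever LLM API wrapper you use, and all class and function names are illustrative rather than the project's actual code.

```python
from dataclasses import dataclass
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")


class ParseError(ValueError):
    """Raised by a parser when a completion doesn't match the expected format."""


@dataclass
class PromptStep(Generic[T]):
    template: str                 # str.format-style prompt template
    format_spec: str              # output-format instructions appended to every prompt
    parse: Callable[[str], T]     # turns a completion into data, or raises ParseError
    max_retries: int = 3

    def run(self, complete: Callable[[str], str], **context) -> T:
        prompt = self.template.format(**context) + "\n\n" + self.format_spec
        last_error = None
        for _ in range(self.max_retries):
            completion = complete(prompt)
            try:
                return self.parse(completion)
            except ParseError as err:
                last_error = err  # sampling is stochastic, so simply ask again
        raise RuntimeError(f"no parseable completion after {self.max_retries} tries") from last_error


def parse_three_lines(completion: str) -> List[str]:
    """Example parser: exactly three non-empty lines, e.g. story choices."""
    lines = [line.strip() for line in completion.splitlines() if line.strip()]
    if len(lines) != 3:
        raise ParseError(f"expected 3 lines, got {len(lines)}")
    return lines
```

Chaining then amounts to feeding one step's parsed output into the next step's `context`, so every prompt in the pipeline gets the same parse-and-retry treatment.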

Below, I’ll run through some aspects of the text pipeline.

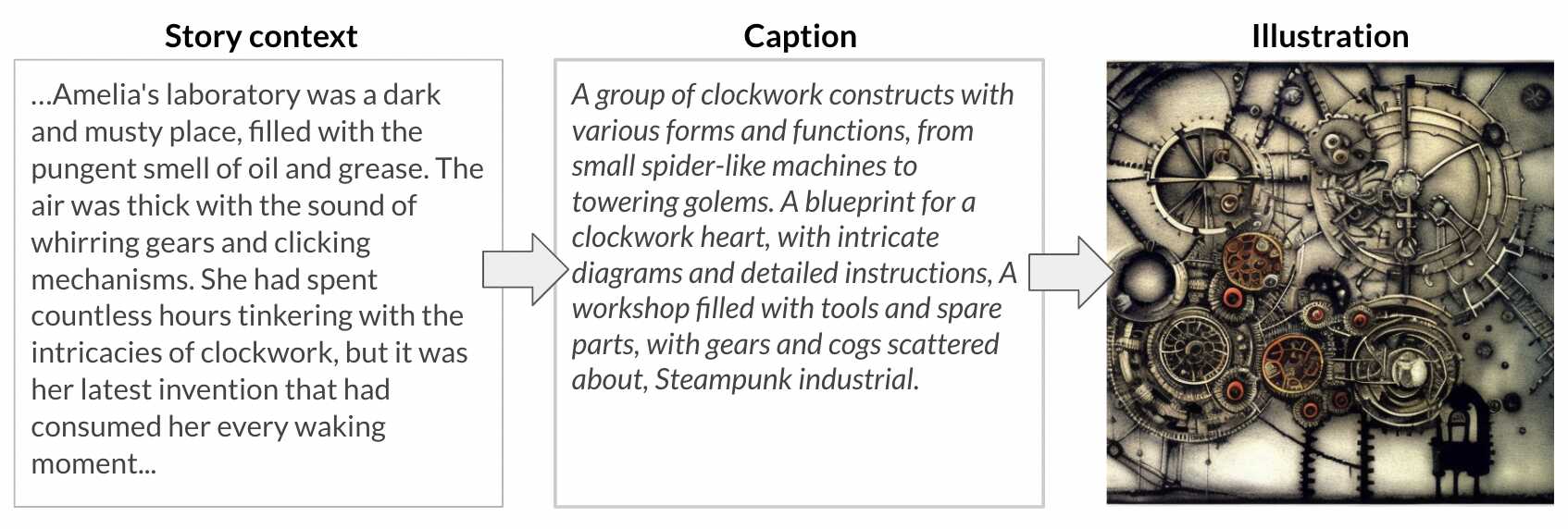

The captions used to generate the images come from a few-shot prompt that processes the latest story scene; the completion is a kind of structured scene description. I didn’t get this quite where I wanted it. I think properly sensible illustrations might require a different approach, like DreamBooth-ing your hero characters.
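One way to turn a structured scene description into a txt2img prompt is sketched below. It assumes a hypothetical "Key: value" line format with `Subject`, `Action`, and `Setting` fields and a fixed style suffix; the actual fields the project used aren't specified here.

```python
from typing import Dict


def parse_scene_description(completion: str) -> Dict[str, str]:
    """Parse 'Key: value' lines (hypothetical format) into a dict; skip other lines."""
    fields: Dict[str, str] = {}
    for line in completion.splitlines():
        key, sep, value = line.partition(":")
        if sep and value.strip():
            fields[key.strip().lower()] = value.strip()
    return fields


def to_image_prompt(fields: Dict[str, str], style: str = "storybook illustration") -> str:
    """Put the most concrete content first, since early tokens tend to dominate."""
    parts = [fields.get(k) for k in ("subject", "action", "setting")]
    return ", ".join([p for p in parts if p] + [style])
```

Keeping the description structured makes it easy to drop or reorder fields while experimenting, without re-running the captioning prompt.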

The story generation has a few notable components.

Stories begin as concepts, consisting of title-synopsis pairs. These are freshly generated from a few-shot prompt that draws from a hand-culled pool of interesting “concept” examples. Three such concepts are presented to the audience at the start of a story cycle: which story do you want to hear?
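A minimal sketch of concept generation: sample a few examples from the hand-culled pool, format them as few-shot "Title/Synopsis" pairs, and parse the completion back into pairs. The prompt wording, the field labels, and `k=4` are assumptions for illustration.

```python
import random
from typing import List, Optional, Tuple

Concept = Tuple[str, str]  # (title, synopsis)


def build_concept_prompt(pool: List[Concept], k: int = 4,
                         rng: Optional[random.Random] = None) -> str:
    """Few-shot prompt built from k examples sampled from a hand-picked pool."""
    rng = rng or random.Random()
    shots = "\n\n".join(f"Title: {t}\nSynopsis: {s}" for t, s in rng.sample(pool, k))
    return ("Here are some story concepts for an all-ages choose-your-own-adventure book.\n\n"
            f"{shots}\n\nTitle:")


def parse_concepts(completion: str) -> List[Concept]:
    """Recover (title, synopsis) pairs; the prompt ended with 'Title:', so restore it."""
    concepts: List[Concept] = []
    for block in ("Title:" + completion).split("Title:")[1:]:
        title, sep, synopsis = block.partition("Synopsis:")
        if sep and title.strip() and synopsis.strip():
            concepts.append((title.strip(), synopsis.strip()))
    return concepts
```

Resampling the few-shot examples on every call is a cheap way to keep successive batches of concepts from converging on the same handful of ideas.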

The narrative itself is broken up into short, chapter-length chunks: maybe five hundred words over a few displayed pages of prose. A chunk is generated; if the story doesn’t end there, interesting and dramatic story choices are generated. The audience’s pick among those choices informs the next chunk.
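The chunk/choice/vote cycle can be summarized as a simple loop. In this sketch, `write_chunk`, `write_choices`, and `collect_vote` are hypothetical callables standing in for the prompt chains and the Twitch vote, and `max_chunks` is an assumed safety cap.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical callables standing in for the prompt chains and the Twitch vote
WriteChunk = Callable[[str, List[str], Optional[str]], Tuple[str, bool]]  # -> (text, story_ended)
WriteChoices = Callable[[str, List[str]], List[str]]
CollectVote = Callable[[List[str]], str]


def run_story(concept: str, write_chunk: WriteChunk, write_choices: WriteChoices,
              collect_vote: CollectVote, max_chunks: int = 10) -> List[str]:
    chunks: List[str] = []
    choice: Optional[str] = None  # no audience choice before the first chunk
    while True:
        text, ended = write_chunk(concept, chunks, choice)
        chunks.append(text)
        if ended or len(chunks) >= max_chunks:
            return chunks
        options = write_choices(concept, chunks)
        choice = collect_vote(options)  # the winning option steers the next chunk
```

A fuller version would ask the model to write an ending as the cap approaches instead of stopping abruptly.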

Choice generation is its own prompt chain. I found it useful to have as much control as possible to induce the model to create specific, dramatic, and interesting choices.

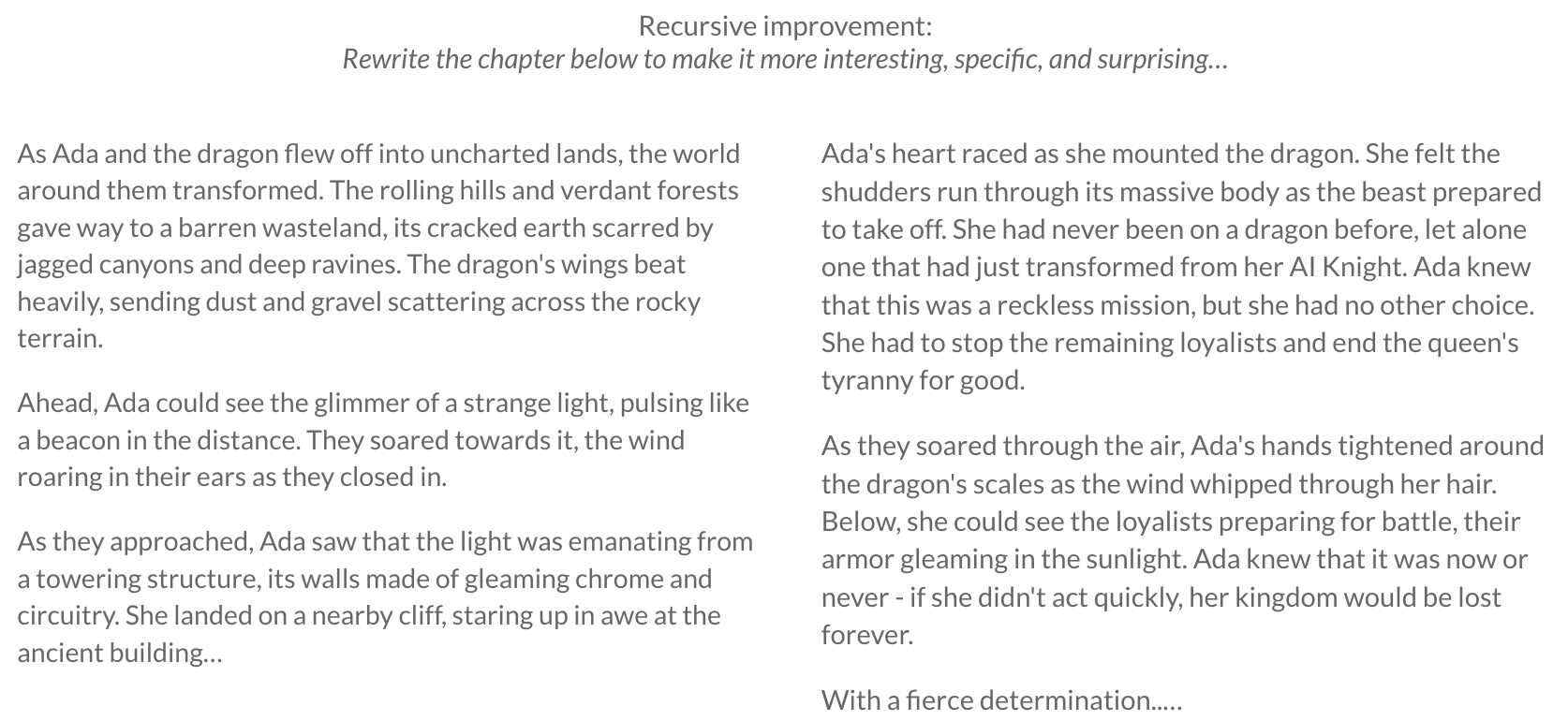

Story choices, like story concepts and story chunks, benefited from some “improvement cycles”, in which the model is asked to improve the results repeatedly along various axes. Typically, I was encouraging the model to make the output more specific and interesting.

Naturally, these “improvement cycles” take time… and indeed, time would become the central challenge of this project, as discussed below. Moreover, I found that with ChatGPT (GPT-3.5) the text wasn’t always strictly better, just rewritten, often with narrative discontinuities. That said, the cycles seemed a net positive. (Preliminary experiments suggested this strategy is more reliably successful with stronger language models.)
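An improvement cycle can be as simple as feeding the model's output back to it with a rewrite instruction per axis. This is a sketch under assumptions: `complete` is a hypothetical LLM wrapper, and the axes, round count, and "keep every event unchanged" guard (aimed at the discontinuity problem) are illustrative choices.

```python
from typing import Callable, Sequence


def improve(draft: str, complete: Callable[[str], str],
            axes: Sequence[str] = ("more specific", "more vivid and surprising"),
            rounds: int = 2) -> str:
    """Repeatedly ask the model to rewrite its own output along each axis."""
    text = draft
    for _ in range(rounds):
        for axis in axes:
            prompt = (f"Rewrite the passage below to be {axis}. "
                      "Keep every event, name, and choice unchanged.\n\n"
                      f"{text}\n\nRewritten passage:")
            revised = complete(prompt).strip()
            if revised:  # keep the previous version if the model returns nothing
                text = revised
    return text
```

Each pass costs a full round trip to the model, which is why these cycles compete directly with latency.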

One final aspect worth discussing is the voice narration. I began by integrating Azure neural voices, of which I’d formed a good impression from various reading apps. These sounded lamentably corporate for reading fiction. I was working on this project during the peak of hype around ElevenLabs’ impressive speech synthesis, so I followed up by integrating a voice clone of myself. This was cool, until I found myself repeatedly upgrading my budget allocations even as I struggled with latency and service availability. So I added a third option, my own Coqui-TTS endpoint on Hugging Face, as an affordable compromise.
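With several TTS providers of varying cost and reliability, one option is a simple fallback chain. This sketch is not necessarily what the project did (its TTS lived on the Unity side), and the backend names and the `TTSUnavailable` exception are hypothetical wrappers you would write around each service.

```python
from typing import Callable, Sequence, Tuple


class TTSUnavailable(Exception):
    """Raised by a backend wrapper when its service is down or over budget."""


def synthesize(text: str,
               backends: Sequence[Tuple[str, Callable[[str], bytes]]]) -> Tuple[str, bytes]:
    """Try each (name, backend) in preference order; return the first audio produced."""
    errors = []
    for name, backend in backends:
        try:
            return name, backend(text)
        except (TTSUnavailable, TimeoutError, ConnectionError) as err:
            errors.append(f"{name}: {err!r}")  # fall through to the next option
    raise RuntimeError("all TTS backends failed: " + "; ".join(errors))
```

Ordering the list by preference (for example, cloned voice first, self-hosted last) keeps the broadcast talking even when the premium service is slow or unavailable.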

Twitch integration worked via Twitch chat. The project’s low point was wasting half a day jumping through all the hoops to enable Twitch API hooks for Twitch’s built-in voting, only to discover that only accredited Twitch partners have access to that functionality. (Oops, RTM.) In lieu of the fancy UI-integrated voting, I built a vote bot. “Type a, b, or c in the chat” is more true to the Twitch spirit anyway.
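The core of a chat vote bot is the tally. The sketch below assumes chat arrives as `(username, text)` pairs, counts only messages that are exactly one option letter, lets each user's latest vote win, and breaks ties toward the earliest-listed option. These rules are illustrative choices, not necessarily the bot's actual behavior.

```python
import re
from collections import Counter
from typing import Dict, List, Tuple


def tally(messages: List[Tuple[str, str]], options: str = "abc") -> Tuple[str, Counter]:
    """messages: (username, text) pairs in arrival order; latest vote per user counts."""
    latest: Dict[str, str] = {}
    for user, text in messages:
        m = re.fullmatch(rf"\s*([{options}])\s*", text, re.IGNORECASE)
        if m:
            latest[user.lower()] = m.group(1).lower()
    counts = Counter(latest.values())
    # max() keeps the first maximal item, so ties (and no votes) go to the earliest option
    winner = max(options, key=lambda o: counts[o])
    return winner, counts
```

Ignoring anything other than a bare option letter keeps ordinary chat chatter from being counted as votes.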

Finally, I configured a Paperspace node to run the game, the Twitch bit, and OBS to stream to Twitch. Behold – the humble beginnings of my generative media empire in the cloud!

Code architecture

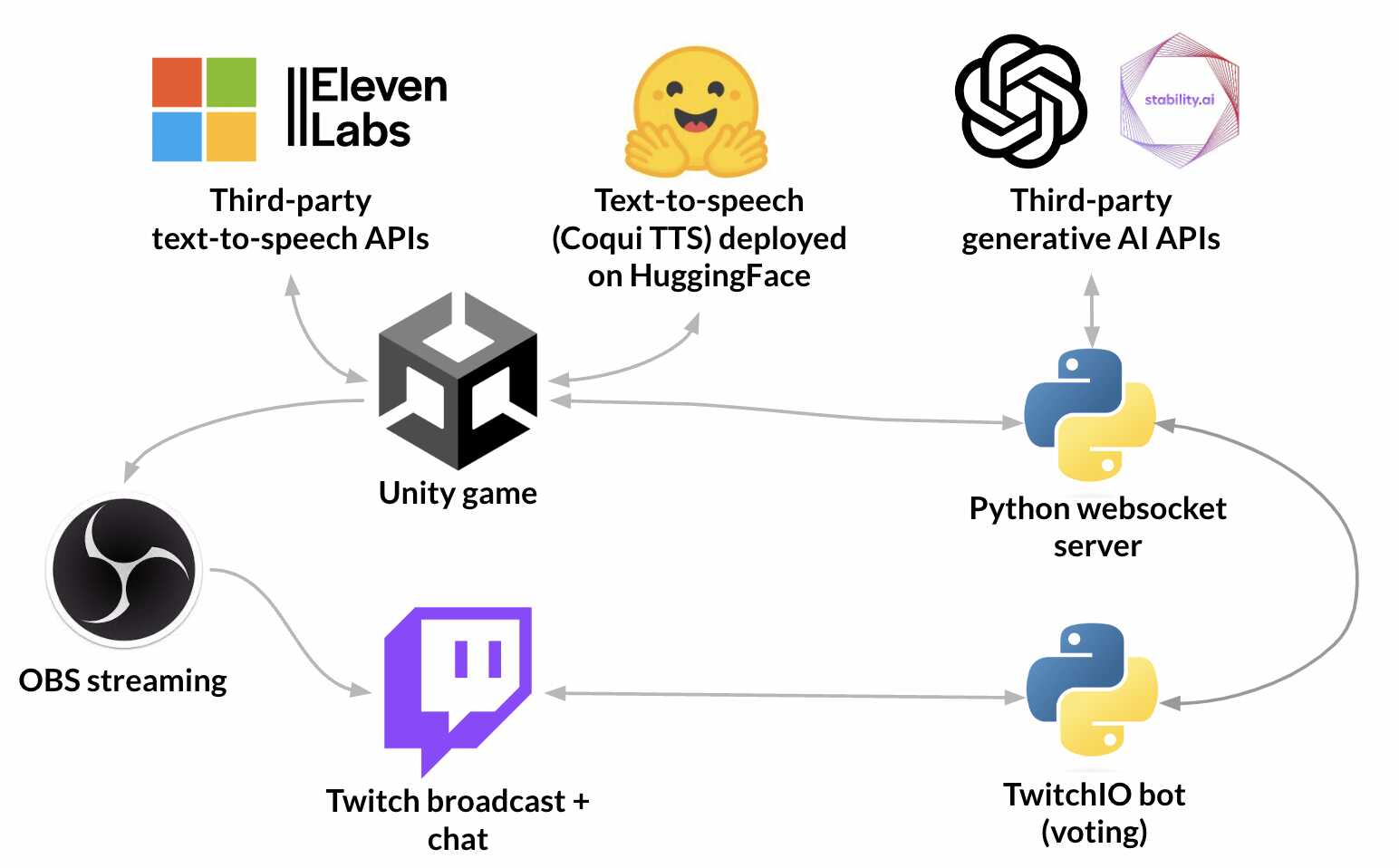

That’s it for the feature run-down. The hard bit was contriving a sensible way for these things to fit together.

The Unity game was responsible for requesting content from a Python server that called various external APIs, generating and storing content. All of this could have been done in C#, but the text processing and API wrapping would have been a pain to build. I’m sure there’s a smart way to do it (a separate C# project, say), but my conviction then and now is that for these sorts of tasks, the logic was going to be built faster and better in Python. The TTS lived in Unity-land, since Unity sometimes needed to be in charge of last-mile text processing for page and dialog box displays, which were in turn tightly coupled with TTS output.

The separation of the Twitch bot and the Python server is a little awkward. Each has its own hermetic run loop, so they needed some kind of asynchronous message-queuing mechanism between them. I knew I’d get myself in trouble trying to roll my own for this little corner of the system, so I sought a pre-baked solution. RabbitMQ addressed that, but it felt like significant overkill. (The alternatives seemed sketchy or stale, and the whole point was to avoid troubleshooting this aspect.)
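For a sense of what the RabbitMQ link involves, here is a minimal sketch using the `pika` client's blocking API. The queue name and the JSON message shape are hypothetical. `pika` is imported inside the functions so that the encoding helpers work without a broker or the library installed.

```python
import json
from typing import Callable, Dict

VOTE_QUEUE = "votes"  # hypothetical queue name


def encode_vote_result(story_id: str, winner: str, counts: Dict[str, int]) -> bytes:
    return json.dumps({"type": "vote_result", "story_id": story_id,
                       "winner": winner, "counts": counts}).encode()


def decode_message(body: bytes) -> dict:
    return json.loads(body.decode())


def publish(body: bytes, host: str = "localhost") -> None:
    """Called from the Twitch bot's loop: push one message and disconnect."""
    import pika  # requires a running RabbitMQ broker
    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    channel = connection.channel()
    channel.queue_declare(queue=VOTE_QUEUE)
    channel.basic_publish(exchange="", routing_key=VOTE_QUEUE, body=body)
    connection.close()


def consume(handle: Callable[[dict], None], host: str = "localhost") -> None:
    """Run on the server side, in its own thread or process: blocks forever."""
    import pika
    connection = pika.BlockingConnection(pika.ConnectionParameters(host=host))
    channel = connection.channel()
    channel.queue_declare(queue=VOTE_QUEUE)

    def on_message(ch, method, properties, body):
        handle(decode_message(body))

    channel.basic_consume(queue=VOTE_QUEUE, on_message_callback=on_message, auto_ack=True)
    channel.start_consuming()
```

The appeal is that neither side's run loop has to know about the other's; the cost is another service to deploy for what amounts to a handful of small messages per story.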

Back in Unity-land, my ambition was to enable a clean, declarative sequence to run the show: DoThis, DoThat, StartVote. Unity coroutines are wonderful for this, and I was delighted to find convenience helpers like WaitUntil.
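The project's sequencing was done with C# coroutines in Unity. To keep the examples in one language, here is a rough asyncio analog of the DoThis, DoThat, StartVote pattern, including a hand-rolled `wait_until` that behaves roughly like Unity's WaitUntil. Everything here is illustrative, with `server_delivers` standing in for the content server.

```python
import asyncio
from typing import Callable, Dict, List


async def wait_until(predicate: Callable[[], bool], poll_seconds: float = 0.01) -> None:
    """Rough asyncio analog of Unity's WaitUntil: resume once predicate() is true."""
    while not predicate():
        await asyncio.sleep(poll_seconds)


async def run_show(state: Dict[str, str], log: List[str]) -> None:
    log.append("turn page")                        # DoThis
    await wait_until(lambda: "chunk" in state)     # block until the content arrives
    log.append(f"narrate {state['chunk']}")        # DoThat
    log.append("start vote")                       # StartVote


async def demo() -> List[str]:
    state: Dict[str, str] = {}
    log: List[str] = []

    async def server_delivers() -> None:  # stands in for the Python content server
        await asyncio.sleep(0.05)
        state["chunk"] = "chapter 1"

    await asyncio.gather(run_show(state, log), server_delivers())
    return log
```

The sequence reads top to bottom exactly as the show plays out, which is the property that gets lost once prefetching logic starts interleaving with it.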

My efforts to reduce latency complicated things. Certain story generation stages were taking too long, causing long pauses in the story presentation.

DoThis, DoThat became the more complicated “DoThis, but prep for DoThat as soon as a condition is met, then DoThatOtherThing once you’re done and the key data has been received”. Where this really bit me was when I started trying to optimize the websocket server’s API consumption as well: I found myself tuning both server and client to be greedy, which signalled that the client-server metaphor was beginning to melt. This sort of asynchronous, overlapping control flow results in cognitive overload. (You may have spotted a recent HN post on this: “Fragile narrow laggy asynchronous mismatched pipes kill productivity”. If your “pipe” is a stochastic generative model, all these problems are magnified.)

Still, I was doing myself no favors wandering into this classic case of tangled concerns, a routine danger in freewheeling prototyping. I think I was a bit stuck in a REST mindset. A cleaner approach (towards which I made some headway) was to have the Unity client signal what kinds of data it was hungry for, and let the API server optimally triage those concerns.
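A minimal sketch of that demand-driven approach: the client declares how many items of each kind it wants buffered, and the server always works on the kind with the largest unmet demand. The class, method names, and content kinds are hypothetical, and a real planner would also respect dependencies (choices can't be generated before the chunk they follow).

```python
from collections import deque
from typing import Any, Deque, Dict, Optional


class ContentPlanner:
    """Hypothetical sketch: client-declared demand drives server-side generation."""

    def __init__(self) -> None:
        self.demand: Dict[str, int] = {}
        self.ready: Dict[str, Deque[Any]] = {}

    def set_demand(self, kind: str, count: int) -> None:
        """Client says: keep `count` items of `kind` buffered for me."""
        self.demand[kind] = count
        self.ready.setdefault(kind, deque())

    def next_job(self) -> Optional[str]:
        """Server asks: which kind is furthest behind? None if all demand is met."""
        deficits = {k: n - len(self.ready[k]) for k, n in self.demand.items()}
        kind = max(deficits, key=deficits.get, default=None)
        return kind if kind is not None and deficits[kind] > 0 else None

    def deliver(self, kind: str, item: Any) -> None:
        self.ready[kind].append(item)

    def take(self, kind: str) -> Optional[Any]:
        """Client consumes an item, which reopens demand for that kind."""
        return self.ready[kind].popleft() if self.ready.get(kind) else None
```

The client no longer orchestrates prefetching; it only states its appetite, and the greedy generate-ahead policy lives in one place on the server.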

You can see the system’s “greedy”, generate-as-far-ahead-of-time-as-possible process flow in the diagram above.

Reflections

The result is superficially cool, but doesn’t bear much scrutiny. Despite my cajoling the language model to improve its text, be more specific, and give some life to the action, the resulting stories are filled with these sorts of passages:

The queen’s guard charged at her, his sword gleaming in the dim light. Ada dodged and weaved, her heart pounding in her chest. She knew she had to keep moving if she wanted to survive. As the battle raged on, Ada felt the weight of the entire kingdom on her shoulders. She had to end this fight, no matter what the cost. Finally, with a desperate lunge, Ada plunged her sword into the guard’s chest, watching as he crumpled to the ground. The room fell silent, save for the hum of the machines.

or…

Sophie and her allies worked tirelessly, using their powers to heal the forest. But as they worked, they discovered something even more terrifying. A dark presence had taken root in the forest, and it was stronger than they realized. With Luna’s help, Sophie delved deeper into the Memory Tree’s secrets, uncovering a dark force that threatened to consume the entire world. The forest was just the beginning.

or…

But as the game grew in popularity, so did the challenges. Injustice still plagued humanity, and it was all too easy for players to lose themselves in the game’s captivating beauty. Amelia knew that the true purpose was to inspire, to find solutions to problems, and to make a difference in the real world. She remained vigilant, determined to jump back into the fray whenever the need arose. Despite the obstacles, Amelia found the perfect balance, creating a world where everyone could experience a little bit of enchantment while still making a difference where it mattered. It was not always easy, but with the support of her companions, she persevered. And as she gazed out at the virtual world around her, she knew that it was worth it.

By now, I’ve heard so many of these bland wrap-ups of the most interesting, seemingly action-packed climaxes of stories that they induce an overexposure-to-VR type feeling in me.

I’ve noticed that very little moment-to-moment action happens. There’s hardly any dialogue or character interaction. The text feels like writing about a story: the model is generating a story treatment rather than something with the texture of an experience meant for a reader. These stories are like a ten-page Publishers Weekly synopsis.

Generated images were hit and miss.

Despite the context given in the relevant prompts, the resulting images are usually barely grounded in the story content. I suspect that even the perfect caption would give Stable Diffusion only a fighting chance of making a coherent, self-consistent illustration, and I’m definitely not generating the perfect Stable Diffusion prompts. That said, with the images so reliably distant from the story, the reader readily makes metaphorical or impressionistic connections instead. What we get are visual hallucinations inspired by the story, and that’s still a net-positive, experience-enriching element.

This prototype’s presentation could be improved in all sorts of ways. Text rendering is sometimes hard to read. Text can be too small. The free fonts I grabbed can get distracting. My streaming rig isn’t quite up to 60 frames per second at 4K. (Paperspace helped, there.)

I also want to acknowledge that, as a viewer, this kind of experience would be so much more interesting with human storytellers using their human creativity. That an AI is doing the storytelling is an interesting novelty. The fact that it doesn’t completely fail is thought-provoking. (Meanwhile, do check out a storytelling event in your community if you’re into this kind of thing.)

Wrap-up

I really enjoyed building this generative choose-your-own-adventure storybook. Generative AI is weird and powerful and fascinating. This particular project ended up being a kitchen sink of features that accumulated on what was, in the end, a “meh” concept. I can already see clever folks on Twitter playing with similar ideas and getting impressive results.

I’m still not too worried about human writers and artists… for now. While the quality might be a bit better with the most powerful language and image models out there, such models are currently either inaccessible or expensive. That said, technology, including generative AI, tends to get better and cheaper with time. I wonder how this approach would fare in a year or three…

To quote Dr. Zsolnai-Fehér, what a time to be alive! This stuff is amazing, scary, silly, and sinister. These models fail (and occasionally brilliantly succeed) in all sorts of interesting, weird ways. While they are stochastic, they do have predictable behaviors. It really matters how you use them, and you are rewarded for systematic exploration. The effect you want might just be a prompt away! I hope you can learn from some of my misadventures above, and here’s to heading out for some more. Let’s keep hacking on these frontiers of AI and experience ~

Appendix: footage

Here’s some more footage of the “infinite storyteller” experience:

…and there’s a remote chance I’m tinkering and broadcasting on Twitch.tv now.